- Oct 22, 2017

For a while now, law firms have been slow to adopt technology-assisted review despite studies proving its effectiveness and approval from the courts. In the August 20, 2015 Tip of the Night, I noted that in a webinar hosted by Exterro, Chief Judge Joy Conti of the United States District Court for the Western District of Pennsylvania said that, despite the developments in Rio Tinto v. Vale encouraging transparency between parties with respect to predictive coding seed sets, she has not seen many predictive coding cases in her court.

Last night, I took the Electronic Discovery Institute's course on Search and Review of Electronically Stored Information. Maura Grossman, a well-known expert on technology-assisted review, stated during the course that, "Manual review and technology assisted review are being held to different standards. One would never have gotten a request to show the other side your coding manual, or have them come visit your review center, and lean over the shoulders of your contract reviewers to see whether they were marking documents properly - responsive - not responsive. I don't remember ever being asked for metrics - precision / recall of a manual production - but I think people are scared of the technology. There are many myths if three documents are coded incorrectly that the technology will go awry and be biased or miss all of the important smoking guns. But humans miss smoking guns also. And, so, I do think it's being held to a different standard. I think that creates disincentives to use the technology because if you're going to get off scot-free with manual review and you're going to have to have hundreds of meet and confers and provide metrics and be extremely transparent and collaborative if you're using the other technology, for many clients it becomes very unattractive and their feeling is it's just easier to use the less efficient process."
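For readers unfamiliar with the metrics mentioned in the quote, precision is the share of produced documents that are actually responsive, and recall is the share of all responsive documents that were produced. The following is a minimal Python sketch of that arithmetic. The function name and the counts are hypothetical and chosen only for illustration; they do not come from the course or from any real production.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Return (precision, recall) for a document production.

    true_pos:  responsive documents that were produced
    false_pos: non-responsive documents that were produced
    false_neg: responsive documents that were missed
    """
    produced = true_pos + false_pos      # everything marked responsive
    responsive = true_pos + false_neg    # everything truly responsive
    precision = true_pos / produced if produced else 0.0
    recall = true_pos / responsive if responsive else 0.0
    return precision, recall


# Hypothetical counts, for illustration only.
precision, recall = precision_recall(true_pos=80, false_pos=20, false_neg=40)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

The same calculation applies whether the coding decisions came from contract reviewers or from a predictive coding tool, which is why the metrics could in principle be requested for either kind of production.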

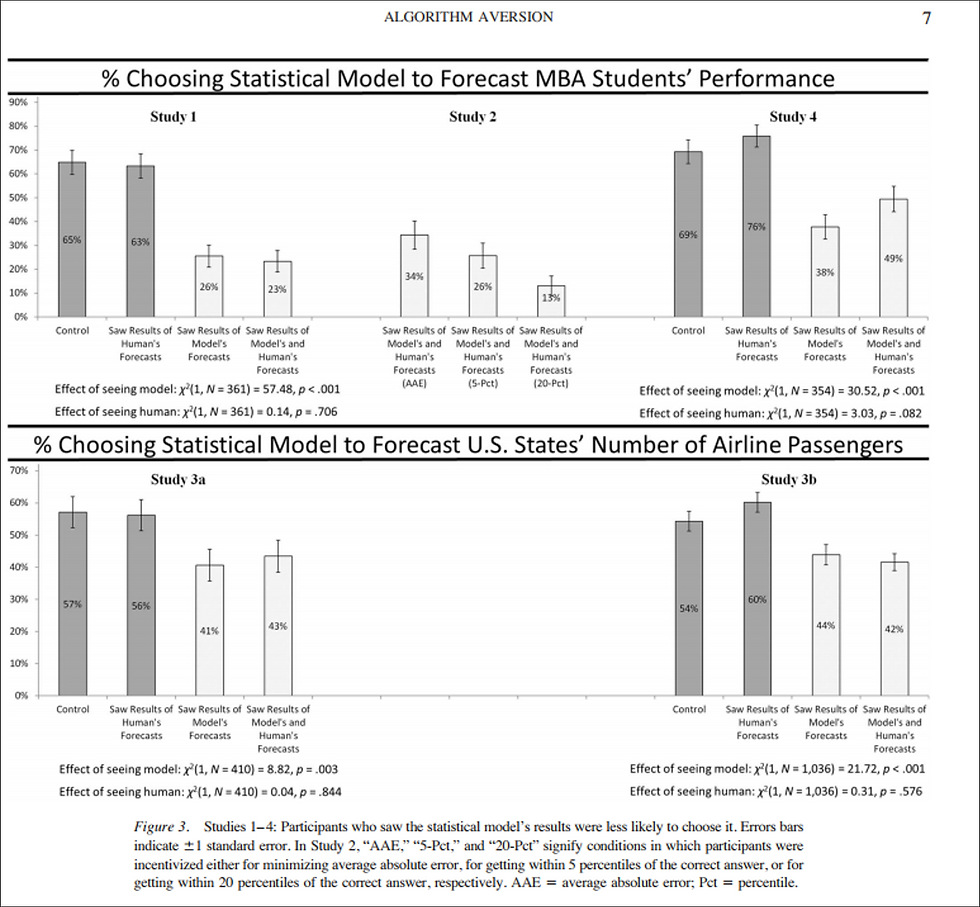

Judge Conti's observations and Ms. Grossman's theorizing are borne out by a study conducted by Berkeley J. Dietvorst, Joseph P. Simmons, and Cade Massey of the University of Pennsylvania, Algorithm Aversion: People Erroneously Avoid Algorithms After Seeing Them Err, Journal of Experimental Psychology (2014). The synopsis of the article states that:

"Research shows that evidence-based algorithms more accurately predict the future than do human forecasters. Yet when forecasters are deciding whether to use a human forecaster or a statistical algorithm, they often choose the human forecaster. This phenomenon, which we call algorithm aversion, is costly, and it is important to understand its causes. We show that people are especially averse to algorithmic forecasters after seeing them perform, even when they see them outperform a human forecaster. This is because people more quickly lose confidence in algorithmic than human forecasters after seeing them make the same mistake."

The paper doesn't specifically address technology-assisted review or the process of identifying responsive documents. It refers to the use of algorithms in the college admissions process and in predicting the number of passengers on American airlines, and it draws its results from a controlled study in which volunteer participants would have received a bonus if they had relied on a statistical model rather than on forecasts made by humans.

Dietvorst mentions the inability of algorithms to learn from experience, their inability to evaluate individual targets, and ethical qualms about having automated processes make important decisions as reasons for humans' aversion to adopting their use.

As the graphs below show, participants in the study were less likely to choose an algorithm not only when they saw the results of its forecasts, but also when they were able to compare its results against decisions made by humans. Seeing humans make mistakes, while knowing that the statistical model could err as well, did not significantly encourage participants to adopt the algorithm.